wICE hardware

wICE is KU Leuven/UHasselt’s latest Tier-2 cluster. It has thin nodes, large memory nodes, interactive nodes and GPU nodes. The cluster has been in production since February 2023 and was extended with additional compute nodes in early 2024.

Hardware details

240 thin nodes

172 Ice Lake nodes

2 Intel Xeon Platinum 8360Y CPUs @ 2.4 GHz (Ice Lake), 36 cores each

(1 NUMA domain and 1 L3 cache per CPU)

256 GiB RAM (memory bandwidth and latency measurements)

default memory per core is 3400 MiB

960 GB SSD local disk

partitions

batch|batch_long|batch_icelake|batch_icelake_long, submit options

68 Sapphire Rapids nodes

2 Intel Xeon Platinum 8468 CPUs (Sapphire Rapids), 48 cores each

(4 NUMA domains and 1 L3 cache per CPU)

The base and max CPU frequencies are 2.1 GHz and 3.8 GHz, respectively.

256 GiB RAM

default memory per core is 2500 MiB

960 GB SSD local disk

partitions

batch_sapphirerapids|batch_sapphirerapids_long, submit options
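Each Sapphire Rapids CPU is split into 4 NUMA domains, so a node exposes 8 domains of 12 cores each. The sketch below computes that layout and maps a core index to its domain. It assumes cores are numbered contiguously within each domain; that is an assumption, since the actual OS numbering may differ and should be checked on the node itself (for example with `lscpu` or `numactl --hardware`). The helper names are hypothetical.

```python
def numa_layout(sockets: int, cores_per_socket: int, domains_per_socket: int) -> tuple[int, int]:
    """Return (total NUMA domains, cores per domain) for a node."""
    if cores_per_socket % domains_per_socket:
        raise ValueError("cores do not divide evenly over NUMA domains")
    return sockets * domains_per_socket, cores_per_socket // domains_per_socket


def domain_of_core(core: int, cores_per_domain: int) -> int:
    """Domain index of a core, ASSUMING contiguous core numbering per domain."""
    return core // cores_per_domain


# Sapphire Rapids node: 2 CPUs x 48 cores, 4 NUMA domains per CPU.
domains, per_domain = numa_layout(2, 48, 4)
print(domains, per_domain)              # 8 12
print(domain_of_core(50, per_domain))   # 4
```

For comparison, the Ice Lake thin nodes have one NUMA domain per CPU, i.e. 2 domains of 36 cores. Keeping a group of threads or MPI ranks inside a single domain avoids remote memory accesses.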

5 big memory nodes

2 Intel Xeon Platinum 8360Y CPUs @ 2.4 GHz (Ice Lake), 36 cores each

(2 NUMA domains and 1 L3 cache per CPU)

2048 GiB RAM

default memory per core is 28000 MiB

960 GB SSD local disk

partition

bigmem, submit options
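Because memory is allotted per core, a job that relies on the default has to request enough cores to cover its memory footprint. A minimal sketch of that arithmetic for a big memory node (72 cores, 28000 MiB per core by default); the helper `cores_for_memory` is hypothetical, and requesting memory explicitly is the alternative.

```python
def cores_for_memory(target_mib: int, default_mib_per_core: int, max_cores: int) -> int:
    """Smallest core count whose default memory covers target_mib (hypothetical helper)."""
    # Integer ceiling division avoids floating-point rounding issues.
    cores = (target_mib + default_mib_per_core - 1) // default_mib_per_core
    if cores > max_cores:
        raise ValueError("target exceeds what a single node provides by default")
    return cores


# Big memory node: 72 cores, 28000 MiB per core by default.
one_tib_mib = 1024 * 1024
print(cores_for_memory(one_tib_mib, 28000, 72))  # 38
```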

1 huge memory node

2 Intel Xeon Platinum 8360Y CPUs (Ice Lake), 36 cores each

(1 NUMA domain and 1 L3 cache per CPU)

The base and max CPU frequencies are 2.4 GHz and 3.5 GHz, respectively.

8 TiB RAM

default memory per core is 111900 MiB

960 GB SSD local disk

partition

hugemem, submit options
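When a job requests only cores, the memory it receives is the core count times the default memory per core. A minimal sketch comparing that full-node default with the installed RAM, using the figures listed above for an Ice Lake thin node and the huge memory node; the helper name `default_job_memory_mib` is hypothetical.

```python
MIB_PER_GIB = 1024


def default_job_memory_mib(cores: int, default_mib_per_core: int) -> int:
    """Default memory (MiB) for a job that only requests cores (hypothetical helper)."""
    return cores * default_mib_per_core


# (cores per node, installed RAM in GiB, default MiB per core), taken from this page.
nodes = {
    "Ice Lake thin": (72, 256, 3400),
    "hugemem": (72, 8 * 1024, 111900),
}

for name, (cores, ram_gib, per_core) in nodes.items():
    job_mib = default_job_memory_mib(cores, per_core)
    share = job_mib / (ram_gib * MIB_PER_GIB)
    print(f"{name}: {job_mib} MiB by default, {share:.1%} of installed RAM")
# Ice Lake thin: 244800 MiB by default, 93.4% of installed RAM
# hugemem: 8056800 MiB by default, 96.0% of installed RAM
```

In Slurm, an explicit `--mem-per-cpu` or `--mem` request overrides this default when a job needs a different amount.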

8 GPU nodes

4 nodes with 16 A100 GPUs in total

2 Intel Xeon Platinum 8360Y CPUs @ 2.4 GHz (Ice Lake), 36 cores each

(2 NUMA domains and 1 L3 cache per CPU)

512 GiB RAM

default memory per core is 7000 MiB

4 NVIDIA A100 SXM4, 80 GiB HBM2e, connected with NVLink

960 GB SSD local disk

partition

gpu|gpu_a100, submit options

4 nodes with 16 H100 GPUs in total

2 AMD EPYC 9334 CPUs (Genoa), 32 cores each

(4 NUMA domains and 4 L3 caches per CPU)

The base and max CPU frequencies are 2.7 GHz and 3.9 GHz, respectively.

768 GiB RAM

default memory per core is 11700 MiB

4 NVIDIA H100 SXM5, 80 GiB HBM3, connected with NVLink

960 GB SSD local disk

partition

gpu_h100, submit options
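On the GPU nodes, splitting the host evenly gives each GPU a fixed share of CPU cores and default memory. The sketch below works this out for both GPU node types from the figures above, assuming a job takes one GPU together with a quarter of the node's cores; that even-split policy is an illustrative assumption, not a documented rule, and the `GpuNode` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuNode:
    name: str
    cores: int                 # total CPU cores in the node
    gpus: int                  # GPUs per node
    default_mib_per_core: int  # default memory per core

    def cores_per_gpu(self) -> int:
        return self.cores // self.gpus

    def default_mib_per_gpu(self) -> int:
        # Default host memory that comes with an even per-GPU share of cores.
        return self.cores_per_gpu() * self.default_mib_per_core


a100 = GpuNode("gpu_a100", cores=72, gpus=4, default_mib_per_core=7000)
h100 = GpuNode("gpu_h100", cores=64, gpus=4, default_mib_per_core=11700)

for node in (a100, h100):
    print(node.name, node.cores_per_gpu(), node.default_mib_per_gpu())
# gpu_a100 18 126000
# gpu_h100 16 187200
```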

4 interactive nodes

2 Intel Xeon Platinum 8358 CPUs @ 2.6 GHz (Ice Lake), 32 cores each

(2 NUMA domains and 1 L3 cache per CPU)

512 GiB RAM

default memory per core is 2000 MiB

1 NVIDIA A100, 80 GiB HBM2e

960 GB SSD local disk

partition

interactive, submit options

1 debug node

2 Intel Xeon Platinum 8358 CPUs @ 2.6 GHz (Ice Lake), 32 cores each

(2 NUMA domains and 1 L3 cache per CPU)

512 GiB RAM

default memory per core is 7500 MiB

1 NVIDIA A100, 80 GiB HBM2e

960 GB SSD local disk

partition

gpu_a100_debug, submit options
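The partition names listed above can be collected into a lookup table, for example to find which partitions offer a given GPU model. A minimal sketch using only partition names from this page; the dictionary and the `partitions_with_gpu` helper are hypothetical, and the long-running variants (`batch_long`, `batch_icelake_long`, `batch_sapphirerapids_long`) are left out for brevity.

```python
from typing import Optional

# Partition -> GPU model available on its nodes (None = CPU only).
PARTITION_GPU: dict[str, Optional[str]] = {
    "batch": None,
    "batch_icelake": None,
    "batch_sapphirerapids": None,
    "bigmem": None,
    "hugemem": None,
    "gpu": "A100",
    "gpu_a100": "A100",
    "gpu_h100": "H100",
    "interactive": "A100",
    "gpu_a100_debug": "A100",
}


def partitions_with_gpu(model: str) -> list[str]:
    """Partitions whose nodes carry the given GPU model, sorted by name."""
    return sorted(p for p, gpu in PARTITION_GPU.items() if gpu == model)


print(partitions_with_gpu("H100"))  # ['gpu_h100']
print(partitions_with_gpu("A100"))  # ['gpu', 'gpu_a100', 'gpu_a100_debug', 'interactive']
```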

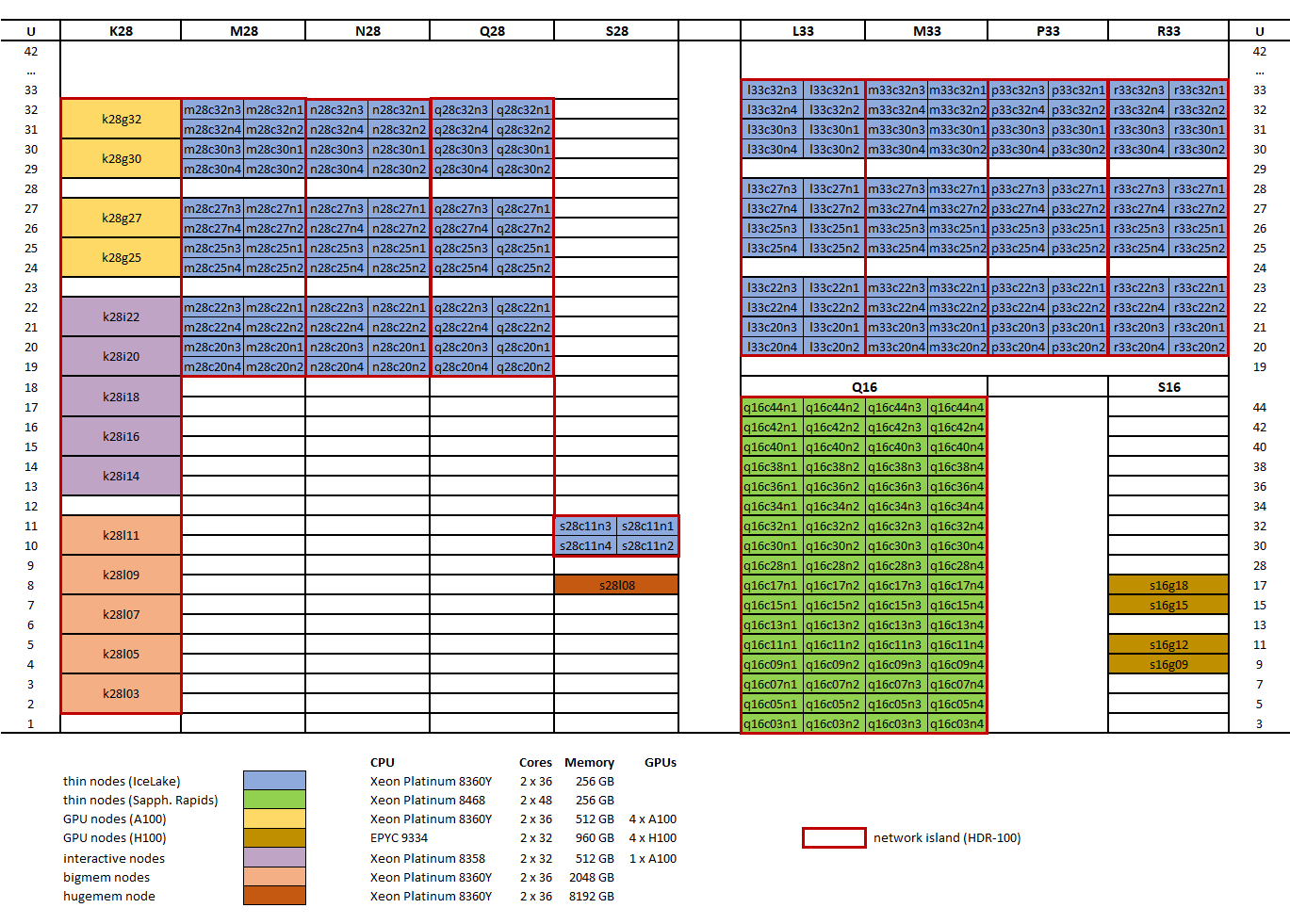

All nodes of the same type are interconnected through an InfiniBand HDR-100 network, except the H100 GPU nodes and the huge memory node, which can only communicate over Ethernet (no high-performance interconnect). The corresponding network islands are indicated on the network diagram. In addition, all nodes are connected to the Lustre parallel file system through an InfiniBand HDR-100 network.
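For multi-node jobs, the interconnect rule above can be encoded as a small check. This sketch assumes each node type forms its own InfiniBand island, as the network diagram suggests, so a job only gets InfiniBand between its nodes when all of them are of one type that is not Ethernet-only. The node-type labels and the `infiniband_between` helper are hypothetical shorthand, and the check ignores the Lustre connection, which uses InfiniBand on every node.

```python
# Node types that only communicate over Ethernet (no high-performance interconnect).
ETHERNET_ONLY = {"gpu_h100", "hugemem"}


def infiniband_between(node_types: list[str]) -> bool:
    """True if all nodes of a job share one InfiniBand HDR-100 island.

    ASSUMES each node type is its own island; H100 GPU and hugemem nodes
    are Ethernet-only.
    """
    if not node_types:
        raise ValueError("a job needs at least one node")
    kinds = set(node_types)
    return len(kinds) == 1 and kinds.isdisjoint(ETHERNET_ONLY)


print(infiniband_between(["icelake", "icelake"]))          # True
print(infiniband_between(["gpu_h100", "gpu_h100"]))        # False
print(infiniband_between(["icelake", "sapphirerapids"]))   # False
```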

The Sapphire Rapids and H100 GPU nodes are the first nodes in the data center to use direct liquid cooling.